The advent of the AI Act

The AI Act is a proposal for a regulation tabled by the European Commission on April 21, 2021 with the aim of regulating artificial intelligence systems. It is expected to be adopted during 2023 at the latest and aims to harmonize the rules on artificial intelligence across the European Union.

The emergence of ChatGPT, scandals involving Tesla cars, and similar events have brought artificial intelligence systems back into the spotlight. Recent stories have fed the ongoing discussions, but the concept of AI, a term coined by John McCarthy in the mid-1950s, has long been a topic of interest. Legislative efforts regarding AI, however, and in particular the proposed European regulation known as the AI Act, are relatively recent.

This text seeks in particular to harmonize key terms. An artificial intelligence system, for example, is defined as software that is developed with one or more of the techniques and approaches listed in the proposal and that can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations or decisions influencing the environments with which it interacts.

Article 2 of the regulation defines its scope of application. It applies in all European Union countries and covers providers that place artificial intelligence systems on the market or put them into service in the Union, users of those systems located in the Union, and finally providers and users established outside the Union where the output produced by the system is used within the Union. As a result, the text will concern any system intended to operate in the European Union, and will affect the development of artificial intelligence worldwide.

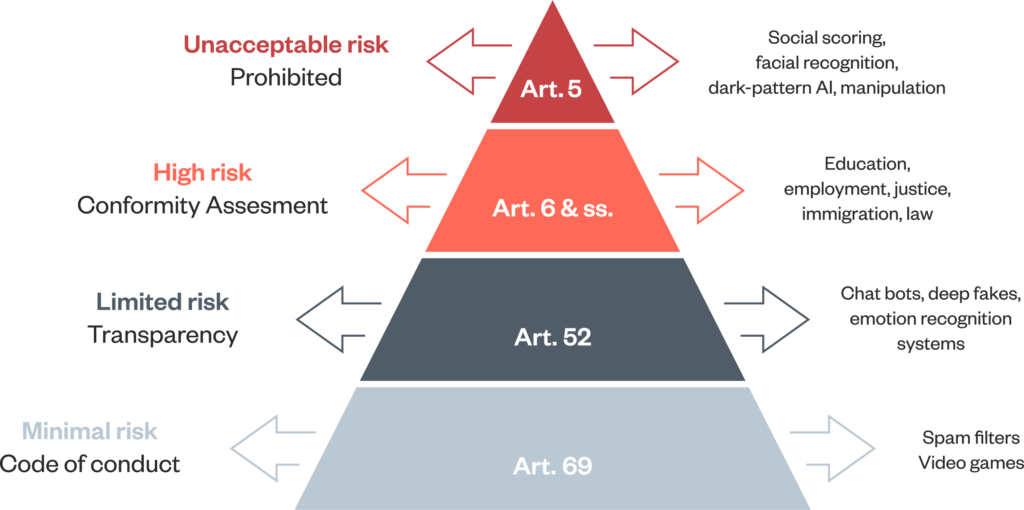

Within this regulation, various categories are established to tighten control over AI. There is one notable exception: AI systems developed or used exclusively for military purposes are excluded from the scope of the text.

Prohibited Artificial Intelligence Systems

To regulate artificial intelligence systems, the proposal first draws up a list of prohibited AI systems. Article 5 of the proposal sets out which systems are prohibited:

- AI systems using subliminal techniques below the threshold of consciousness to alter behavior in ways that may cause physical or psychological harm.

- AI systems that exploit the vulnerabilities of a group of people due to their age or disability.

- AI systems generating a social score of a natural person’s trustworthiness based on social behavior or characteristics, if that score leads to:

  - Prejudicial or unfavorable treatment of individuals or entire groups in a social context unrelated to the context in which the data was collected.

  - Prejudicial or unfavorable treatment of individuals or entire groups that is unjustified or disproportionate to their social behavior.

- Real-time remote biometric identification systems used in public spaces, unless:

  - They are used for targeted searches for victims of crime, such as missing children.

  - They help prevent a specific, substantial and imminent threat to the life or safety of individuals, or a terrorist attack.

  - They enable the detection, location, identification or prosecution of the perpetrator or suspect of a criminal offence punishable by a custodial sentence with a maximum of at least 3 years.

It should be noted that the nature of the situation is taken into account, as are the seriousness and consequences of using such a system for rights and freedoms. The principle of proportionality is thus respected: the response is adapted to the risk of harm, negative influence or infringement of fundamental rights.

Authorization from the competent national authority is required before these tools can be used. The national authority in question therefore decides whether such AI systems may be used in its country. National action of this kind has already been seen in Italy, where ChatGPT was temporarily banned throughout the nation, and in various other countries whose governments have restricted its use.

Because assessment is left to national authorities, European Union member states remain free to evaluate and block these artificial intelligence systems.

The category of High-Risk Artificial Intelligence systems

The AI Act creates a category of high-risk artificial intelligence systems. Classification in this category is based on 2 cumulative conditions:

- The AI system is intended to be used as a safety component of a product, or is itself a product, covered by Union harmonization legislation.

- The product of which the AI system is a safety component, or the AI system itself as a product, is subject to a third-party conformity assessment before being placed on the market or put into service in accordance with that Union harmonization legislation.

In other words, AI systems may be considered high-risk when they fall under one of the European Union harmonization legislative acts listed in Annex 2 of the proposal. Limiting the qualification in this way keeps the scope of the text from growing unchecked, so that the areas it covers can be limited and controlled as the European Union’s harmonization work progresses.

In addition, Annex 3 of the text contains a list of high-risk AI systems which, according to Article 7 of the proposal, can be updated as long as 2 conditions are met:

- AI systems are used in the areas listed in Annex 3.

- AI systems present a risk of harm to health or safety, or a risk of negative impact on fundamental rights.

The list in Annex 3 of the proposal currently includes:

- AI systems for the biometric identification and categorization of individuals, with a particular focus on “real-time” and “post” (after-the-fact) remote biometric identification of individuals.

- AI systems for managing and operating critical infrastructures such as road traffic, water, gas, heating and electricity supplies.

- Education and professional training systems, such as the assignment of individuals to professional training institutions.

- Systems for assessing students in vocational education and training establishments, and for assessing participants in a test to enter these educational establishments.

- Employment and workforce management systems and access to self-employment, such as the recruitment or selection of individuals for job offers, and particularly the filtering of applications, or AI used for promotion and dismissal decisions.

- Systems dealing with access and entitlement to essential private services, public services and social benefits, for example, analyzing a person’s reliability and solvency in order to provide them with access to these services, as well as being able to revoke, reduce or recover these benefits and services.

- AI systems designed for use by law enforcement authorities, such as for analyzing the reliability of evidence during criminal investigations or prosecutions.

- AI systems managing migration, asylum and border controls, such as immigration risk analysis, analysis of an individual’s emotional state, or assessment of asylum applications.

- AI systems dealing with the administration of justice and democratic processes, such as the interpretation of facts and law and its application to a concrete set of facts.

Classification as a high-risk AI system triggers obligations such as establishing a risk management system, carrying out additional procedural steps, and increasing the transparency of the information given to users.
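The categories described so far can be read as a decision order: prohibited systems first, then the 2 cumulative product-safety conditions, then the areas of Annex 3. The Python sketch below only illustrates that reading and is not a legal test. The `AISystemProfile` fields, the `RiskTier` names and the shorthand area labels are all hypothetical, and the exceptions for biometric identification are deliberately left out.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    OTHER = "other"


# Hypothetical shorthand labels for the Annex 3 areas listed above.
ANNEX_3_AREAS = frozenset({
    "biometric_identification",
    "critical_infrastructure",
    "education_and_training",
    "employment",
    "essential_services",
    "law_enforcement",
    "migration_and_border_control",
    "justice_and_democratic_processes",
})


@dataclass(frozen=True)
class AISystemProfile:
    """Self-declared facts about a system (all field names are assumptions)."""
    uses_prohibited_practice: bool = False
    safety_component_or_product_under_eu_law: bool = False
    needs_third_party_conformity_assessment: bool = False
    annex_3_area: Optional[str] = None


def classify(profile: AISystemProfile) -> RiskTier:
    # Prohibited systems (Article 5) take precedence over everything else.
    if profile.uses_prohibited_practice:
        return RiskTier.PROHIBITED
    # The two product-safety conditions are cumulative: both must hold.
    product_rule = (
        profile.safety_component_or_product_under_eu_law
        and profile.needs_third_party_conformity_assessment
    )
    if product_rule or profile.annex_3_area in ANNEX_3_AREAS:
        return RiskTier.HIGH_RISK
    return RiskTier.OTHER


cv_filter = AISystemProfile(annex_3_area="employment")
print(classify(cv_filter).value)  # high-risk
```

Checking the prohibited case first reflects the idea that a prohibited system cannot be rescued by also fitting a high-risk area. A real assessment would of course be made case by case by the provider and the competent authorities.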

Requirements for High-Risk AI systems

The regulation covers not only high-risk artificial intelligence systems themselves, but also their providers and users, who are obliged to ensure that AI systems do not generate risk.

First, however, we need to address the requirements that apply to the high-risk systems themselves, starting with the risk management system.

A risk management system must be established, implemented, documented and maintained for high-risk AI systems in accordance with Article 9 of the proposal. It consists of a process that runs throughout the entire lifecycle of a high-risk AI system, and includes elements such as:

- Identification and analysis of known and foreseeable risks associated with each high-risk AI system.

- Estimation and analysis of risks likely to arise when the system is used as intended.

- Assessment of other potential risks.

- The adoption of appropriate risk management measures. These measures must:

  - Eliminate or reduce risks as far as possible.

  - Where necessary, implement mitigation and control measures for risks that cannot be eliminated.

  - Provide users with adequate information, in particular on the risks involved, or training on how to use the AI system.
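One way to picture the process above is as a risk register that is reviewed throughout the system's lifecycle. The Python sketch below is a minimal illustration under stated assumptions: the `Risk` record, its field names and the `open_items` check are hypothetical and are not prescribed by Article 9. It only flags risks that have been identified but not yet eliminated, mitigated, or explained to users.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Risk:
    """One identified risk (hypothetical record layout)."""
    description: str
    arises_under_intended_use: bool  # recorded for documentation purposes
    eliminated: bool = False
    mitigations: List[str] = field(default_factory=list)
    user_information: str = ""


def open_items(register: List[Risk]) -> List[str]:
    """List the gaps still to be handled for risks that are not eliminated."""
    issues = []
    for risk in register:
        if risk.eliminated:
            continue  # nothing left to mitigate or explain
        if not risk.mitigations:
            issues.append(f"{risk.description}: no mitigation or control measure")
        if not risk.user_information:
            issues.append(f"{risk.description}: no information for users")
    return issues


register = [
    Risk(
        "biased ranking of applicants",
        arises_under_intended_use=True,
        mitigations=["periodic bias audit"],
        user_information="described in the user manual",
    ),
    Risk("use outside the intended purpose", arises_under_intended_use=False),
]

for item in open_items(register):
    print(item)
```

Rerunning such a check whenever the system changes mirrors the idea that risk management is a continuous process rather than a one-off step.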

When judging whether a risk management system has been implemented correctly, the technical knowledge, experience, education and training that can be expected of the user are taken into account. High-risk AI systems are tested to ensure that the most appropriate risk management measures are in place, depending on the purpose of the AI system. To this end, ZIWIT’s HTTPCS solutions can be used to protect your systems and websites against external attacks.

On top of this come requirements for transparency and the provision of information to users, which support compliance with provider and user obligations. High-risk artificial intelligence systems must come with a user manual in an appropriate digital format that identifies the provider and describes the characteristics, capabilities and performance limits of the system.

The manual must also include information on modifications to the AI system, the human control measures in place and the expected lifetime of the high-risk AI system.

Obligations of the user and the AI system provider

A provider of a high-risk AI system has a large number of obligations. The competent national authorities are responsible for testing and verifying that these obligations are met, and providers are obliged to cooperate with these authorities.

Article 16 lists the provider’s obligations:

- The provider must ensure that its high-risk AI systems comply with the requirements of the regulation.

- It must implement a quality management system for the high-risk AI system, in accordance with Article 17 of the regulation.

- It must also draw up the system’s technical documentation.

- It must ensure that the high-risk AI system undergoes the applicable conformity assessment procedure before being placed on the market or put into service.

- It must keep the logs generated automatically by the high-risk AI system when those logs are under its control.

- It must comply with registration requirements.

- It must take the necessary corrective action if the high-risk AI system does not comply with the requirements.

- It must inform the competent national authorities of the Member States in which the system has been made available or put into service of any non-compliance and of the measures taken to remedy it.

- It must place the CE mark on the high-risk AI system to indicate compliance with the regulation.

- Finally, at the request of a competent national authority, it must provide proof that the high-risk AI system complies with the requirements of the regulation.

In summary, the regulation addresses dangerous AI systems and enables harmonized control over them by establishing prohibited and high-risk categories, while leaving the assessment of systems to the competent national authorities. This gives those authorities a certain degree of freedom to act in the face of the dangers posed by developing AI systems, and facilitates cross-border bans and controls on these systems.